This is a long and hopefully interesting story about how I fixed Disney Plus streaming on my IPv6-enabled internet connection, which had a non-standard MTU of 1492 bytes.

The short summary is:

- Zen Internet, GB appears to support a Layer 2 MTU of 1508. Set your WAN interface MTU accordingly and your PPPoE session, once established, will let you advertise an MTU of 1500 bytes for IP (TCP/IP), which most apps and services on the internet prefer.

- You may not have to disable IPv6 if you’re having issues streaming Disney Plus; it’s probably just getting tripped up by an MTU lower than 1500.

And now for the rest of the fun story.

I recently re-subscribed to Disney Plus so I could catch up on new seasons of my favourite series. I settled in on a Saturday evening, followed their email, and subscribed for one month of the highest quality picture they offer. I logged in on my TV to watch; the cursor spun for a very long time, then the app told me there was an error and that I should contact their subscription support.

I ended up chatting with two different people because I was using my mobile phone: whenever my screen locked while I rebooted something or other at the suggestion of their support personnel, the app got suspended, and on resume the chat session was lost.

On the following day, I used my PC to start a chat session with the support team, and this time I chose Technical Support. I got a knowledgeable person who went through several tests (turn off Wi-Fi, reboot the router, and so on), all to no avail. I had a sneaky feeling that something about my internet connection’s MTU was causing trouble for Disney Plus, or for some of its content delivery networks. I wasn’t super confident about this, however, since I couldn’t believe that a network as large as Disney Plus would fall prey to something so basic, especially since I had been an on-and-off customer of Disney Plus for nearly 3 years without trouble. It couldn’t be IPv6 either, since I’ve had IPv6 for 3 years as well.

My PS5 was the only thing that worked consistently, and I knew that the PS5, even though it accepted IPv6 addressing, generally had an OS-level preference for IPv4, which the Disney Plus app was happy about. My PC would sometimes work; my phone didn’t work at all. All these devices were happy the last time I had a subscription, and nothing about my network had changed, so it was likely something that Disney Plus had introduced or changed.

Since I had a few hours to spare after a busy week and weekend, I decided to delve into the matter some more. Wireshark on my PC and packet captures on my router suggested that ICMP was working as expected, sending “packet too big” messages as required, however, a lot of the connections I attributed to Disney Plus seemed to stall after the ICMP message was sent by my router. The client would retry a few times, and then eventually the app would show a message advising you to contact support.
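To make the stall easier to picture, here is a toy model in Python of what path MTU discovery is supposed to do, and what happens when the sending side does not react to “packet too big”. It is only a sketch: the function name `deliver` and its parameters are made up for illustration, and it is not a claim about what Disney Plus servers actually do internally.

```python
from typing import Optional


def deliver(packet_size: int, path_mtu: int, sender_honours_ptb: bool,
            max_attempts: int = 5) -> Optional[int]:
    """Return the packet size that finally gets through, or None if the flow stalls.

    Toy model: a single router on the path enforces `path_mtu`. Oversized
    packets are dropped and answered with a "packet too big" message that
    carries the router's MTU.
    """
    size = packet_size
    for _ in range(max_attempts):
        if size <= path_mtu:
            return size  # the packet fits, so delivery succeeds
        if sender_honours_ptb:
            size = path_mtu  # sender shrinks its packets and retries
        # Otherwise the sender retransmits at the same size and is dropped again.
    return None  # gave up: this is the "spinner, then error" case


if __name__ == "__main__":
    print(deliver(1500, 1492, sender_honours_ptb=True))
    print(deliver(1500, 1492, sender_honours_ptb=False))
```

A sender that honours the message recovers after one retry at 1492 bytes; one that ignores it never gets a full-size packet through, which matches the retry-then-give-up pattern I saw in the captures.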

I observed that the app was actually trying to establish IPv6 connections to the relevant content servers, which I could tell from inspecting the TLS Client Hello packets, as they had distinctive domain names. Despite what Disney Plus help pages say, IPv6 is in use, and surely, they can’t expect me to disable IPv6 when they *should* design their app and infrastructure to avoid using IPv6 if they weren’t ready for it.

My ISP is currently Zen Internet, GB, and like so many ISPs in the UK, they use PPPoE. Since PPPoE has an overhead of 8 bytes per packet, the MTU on internet-bound connections in my home is lowered by 8 to 1492, which is not the typical convention on the internet. However, with ICMP working correctly, this should pose no problem for any modern app or service. I lived with this fact, having inspected network traffic and confirmed that it takes about 1ms after a connection is established for the relevant ICMP message to be sent by my router to both ends of the connection… and I thought that’s not bad. In practice, the way TCP handles packet loss adds a slightly longer delay than the 1ms LAN latency would imply, but nothing frustrating enough to act on.
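For anyone who wants the arithmetic spelled out, here is a minimal sketch in Python. The header sizes are the standard ones (6 bytes of PPPoE header plus a 2-byte PPP protocol field, 20 bytes for IPv4 and TCP without options, 40 bytes for the fixed IPv6 header); the function and constant names are just my own for illustration.

```python
PPPOE_HEADER = 6        # PPPoE session header
PPP_PROTOCOL_ID = 2     # PPP protocol field
PPPOE_OVERHEAD = PPPOE_HEADER + PPP_PROTOCOL_ID  # 8 bytes in total

IPV4_HEADER = 20        # without options
IPV6_HEADER = 40        # fixed header, no extension headers
TCP_HEADER = 20         # without options


def ip_mtu(l2_mtu: int) -> int:
    """IP MTU left once PPPoE framing is carried inside the Ethernet payload."""
    return l2_mtu - PPPOE_OVERHEAD


def tcp_mss(mtu: int, ip_header: int) -> int:
    """Largest TCP payload per packet for a given IP MTU."""
    return mtu - ip_header - TCP_HEADER


if __name__ == "__main__":
    mtu = ip_mtu(1500)  # conventional Ethernet payload size
    print(f"IP MTU over PPPoE: {mtu}")
    print(f"IPv4 TCP MSS: {tcp_mss(mtu, IPV4_HEADER)}")
    print(f"IPv6 TCP MSS: {tcp_mss(mtu, IPV6_HEADER)}")
```

With a 1500-byte Ethernet payload this gives an IP MTU of 1492, so any remote end that insists on sending full 1500-byte packets has to be told to back off before data flows.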

Well, since I wanted to watch Disney Plus, this was finally the time to investigate how to address this in the hopes that it might fix Disney Plus streaming too.

I did some Google searches and found some speculative information that Zen Internet will accept a Layer 2 MTU (Ethernet) of 1508 in order to compensate for the overhead of PPPoE. Heh! Really? It’s that simple? Why didn’t I bother to check this two years ago?

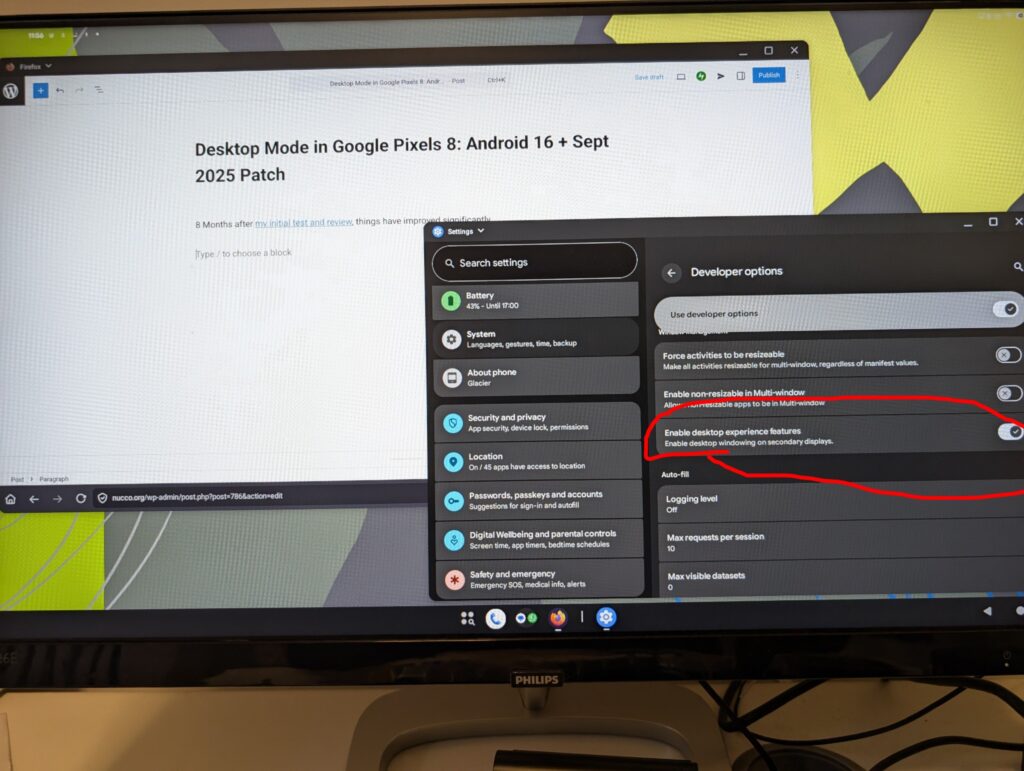

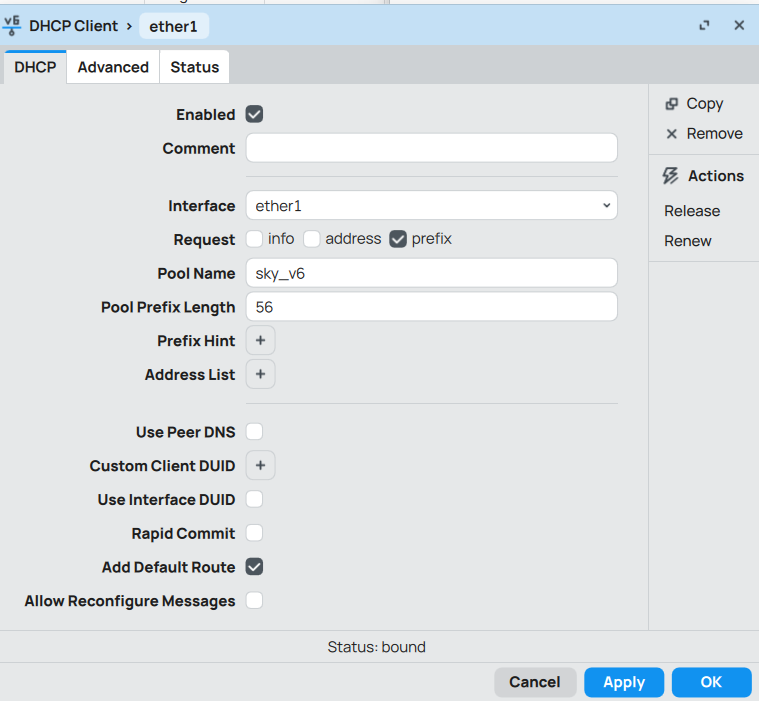

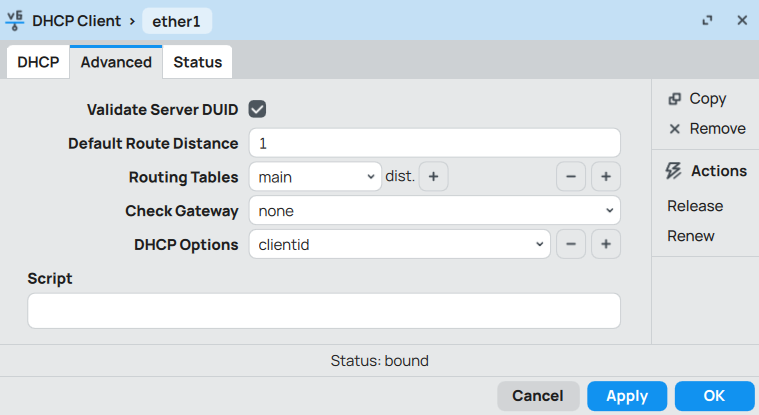

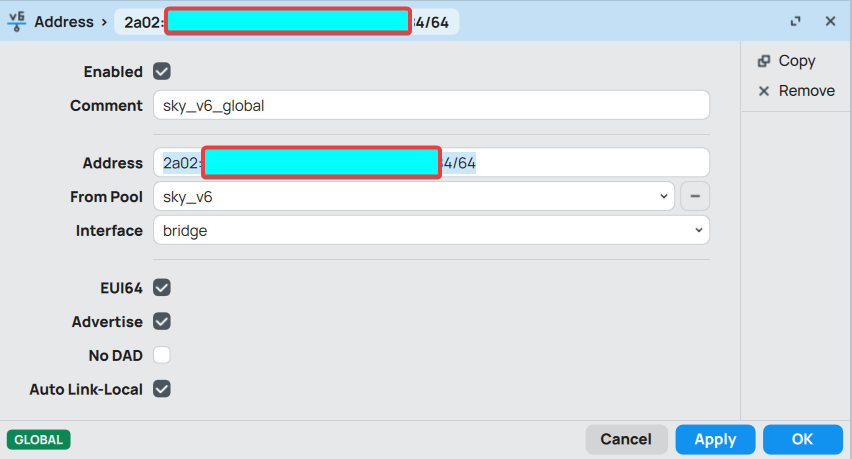

Anyway, I logged into my MikroTik router and raised the L2 MTU for “ether1”, which is acting as my WAN port, by 8 bytes. The PPPoE session dropped and restarted, and voila! The MTU is now 1500 bytes.
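If you want to sanity-check a change like this from a machine behind the router, one common approach is to ping with the largest payload that should fit and with fragmentation disallowed. The sketch below only computes that payload size; the helper name `max_ping_payload` is hypothetical, and how you pass the size to your ping tool (for example `ping -M do -s <size>` with Linux iputils) is an assumption about your setup.

```python
ICMP_ECHO_HEADER = 8            # ICMPv4 and ICMPv6 echo headers are both 8 bytes
IP_HEADER = {4: 20, 6: 40}      # IPv4 without options, IPv6 fixed header


def max_ping_payload(mtu: int, ip_version: int) -> int:
    """Largest echo payload that fits in one unfragmented packet of size `mtu`."""
    return mtu - IP_HEADER[ip_version] - ICMP_ECHO_HEADER


if __name__ == "__main__":
    for mtu in (1492, 1500):
        print(mtu, max_ping_payload(mtu, 4), max_ping_payload(mtu, 6))
```

For a 1500-byte MTU that works out to 1472 bytes over IPv4 and 1452 bytes over IPv6; at 1492 the figures are 1464 and 1444.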

Okay, that’s good. No more wasting precious milliseconds retransmitting packets due to ICMP packet too big. Great. I launch the Disney Plus app on my phone, it feels snappier than before, and instantly starts streaming.

Wow. I could have done this two freaking years ago 🙂

The moral of this story is that the Disney Plus app and infrastructure development team probably needs to pay more attention to how their infrastructure handles variable MTU values on the internet. Their app seems to suffer from significantly higher latency when used on networks with a non-typical MTU, and in addition, some of the servers in their video delivery CDN appear unable to deal with path MTU discovery when processing IPv6 traffic. Hopefully one of you stumbles upon this blog and puts some effort into improving this. Netflix and YouTube handle this stuff flawlessly, and there’s no reason why you cannot. Also, if you really don’t want users to use IPv6 on your service, don’t advertise AAAA records :).
